The Impact of OpenAI's DevDay

What Happened, Why It Matters & What Practitioners Are Saying

OpenAI held its inaugural DevDay yesterday, livestreaming the event to the general public and hosting ~900 in-person guests. Over the course of the day, CEO Sam Altman made a series of significant announcements intended to strengthen both OpenAI’s and Microsoft’s positions in the AI and cloud wars.

As I shared in my last post, enterprise adoption of AI products has largely been a tale of two cities: AI startups have raised over $15B in venture capital, but only 4% of enterprises have meaningfully deployed capital to projects with significant impact.

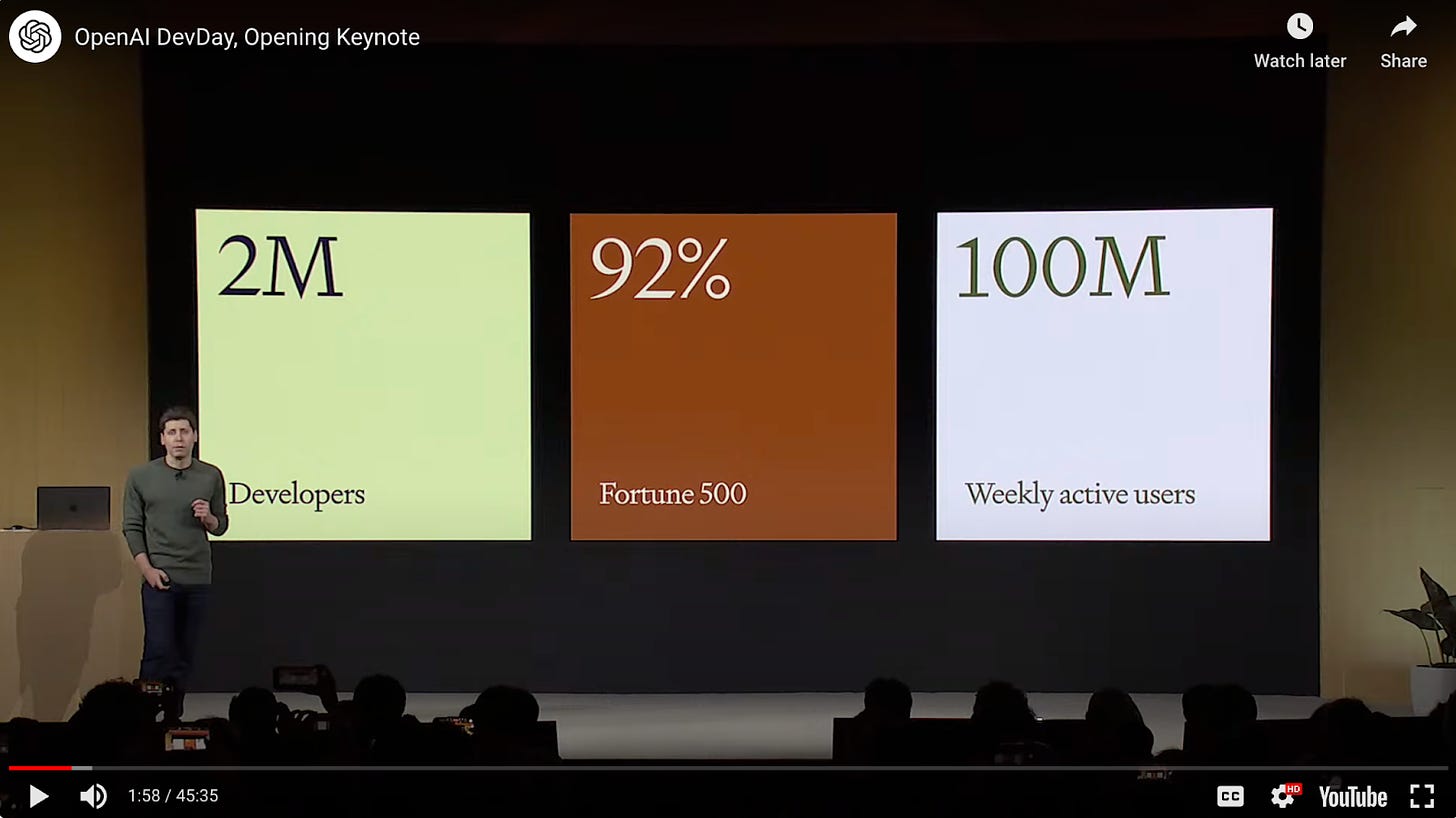

Despite the lack of enterprise adoption, it’s clear that ChatGPT, OpenAI, and LLMs have captured the hearts and minds of the consumer and developer communities.

What Happened

Altman took the stage to talk about the progress OpenAI has made since launching ChatGPT one year ago and the new products they’re bringing to market:

GPT-4 Turbo: OpenAI unveiled its latest LLM, GPT-4 Turbo. The model increases the context length from 8k (or 32k) up to 128k tokens, offers improved control over outputs and higher rate limits, and is priced 3x lower for input tokens and 2x lower for output tokens than GPT-4. The lower price broadens OpenAI’s reach and makes the model more accessible to developers and businesses alike.
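To make the pricing change concrete, here is a minimal cost sketch. It assumes the per-1K-token list prices announced at DevDay (GPT-4 8K: $0.03 input / $0.06 output; GPT-4 Turbo: $0.01 input / $0.03 output), which may since have changed, and the token counts are made-up examples. Because input and output tokens are discounted by different amounts, the overall savings depend on how prompt-heavy a workload is.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-1K-token list prices in USD (assumed DevDay-era prices; may have changed)."""
    input_per_1k: float
    output_per_1k: float


GPT4_8K = Pricing(input_per_1k=0.03, output_per_1k=0.06)
GPT4_TURBO = Pricing(input_per_1k=0.01, output_per_1k=0.03)


def request_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of a single request with the given prompt and completion sizes."""
    return (input_tokens / 1000) * p.input_per_1k + (output_tokens / 1000) * p.output_per_1k


if __name__ == "__main__":
    # Hypothetical retrieval-heavy request: long prompt, short answer.
    old = request_cost(GPT4_8K, input_tokens=6000, output_tokens=500)
    new = request_cost(GPT4_TURBO, input_tokens=6000, output_tokens=500)
    print(f"GPT-4: ${old:.3f}  GPT-4 Turbo: ${new:.3f}  ratio: {old / new:.2f}x")
```

With these example numbers, the long-prompt request comes out about 2.8x cheaper, while an output-heavy request would land closer to 2x.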

GPT-4 Turbo’s Improved Control Over Outputs: OpenAI introduced JSON mode, which ensures the model responds with valid JSON and makes it much easier to pass outputs to other APIs. GPT-4 Turbo is also much better at function calling than previous models, and a new seed parameter makes outputs largely reproducible, giving users a higher degree of control over what their prompts return. Perhaps most interestingly, OpenAI now handles retrieval in-platform (via the new Assistants API), letting users bring in knowledge from external documents and databases, something developers previously had to build themselves with RAG (Retrieval-Augmented Generation).
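The sketch below shows roughly what these controls look like in a Chat Completions request, built as a plain dictionary so it runs without an API key. It assumes the `response_format`, `seed`, and `tools` parameters and the `gpt-4-1106-preview` model name from the DevDay-era API; `build_request`, `run_tool_calls`, and `get_weather` are hypothetical names for illustration. The second helper shows the other half of function calling: the model only returns the name and JSON-encoded arguments of the function it wants, and your own code executes it and sends the result back as a `tool` message.

```python
import json


def get_weather(city: str, unit: str = "celsius") -> dict:
    """Hypothetical local function; a real app would query a weather service here."""
    return {"city": city, "temperature": 21, "unit": unit}


# Map tool names the model may request to local Python callables.
LOCAL_FUNCTIONS = {"get_weather": get_weather}


def build_request(user_prompt: str, seed: int = 1234) -> dict:
    """Chat Completions kwargs using JSON mode, a fixed seed, and one tool (assumed schema)."""
    return {
        "model": "gpt-4-1106-preview",  # assumed GPT-4 Turbo preview model name
        "seed": seed,  # same seed and parameters -> mostly reproducible outputs
        "response_format": {"type": "json_object"},  # JSON mode
        "messages": [
            # JSON mode expects the prompt itself to ask for JSON.
            {"role": "system", "content": "You are a helpful assistant. Reply in JSON."},
            {"role": "user", "content": user_prompt},
        ],
        "tools": [{
            "type": "function",
            "function": {
                "name": "get_weather",
                "description": "Get the current weather for a city.",
                "parameters": {  # JSON Schema for the arguments
                    "type": "object",
                    "properties": {
                        "city": {"type": "string"},
                        "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                    },
                    "required": ["city"],
                },
            },
        }],
    }


def run_tool_calls(tool_calls: list) -> list:
    """Execute each requested tool call and build the 'tool' messages to send back."""
    results = []
    for call in tool_calls:
        fn = LOCAL_FUNCTIONS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return results
```

With the official `openai` Python package (v1+), the dictionary could be passed as `client.chat.completions.create(**build_request(...))`. The SDK returns tool calls as objects, so you would read `call.function.name` and `call.id` as attributes rather than dictionary keys.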

New Modalities: OpenAI is packaging DALL·E 3, GPT-4 Turbo with Vision, and Whisper V3 within the API. With GPT-4 Turbo with Vision, users can include images as inputs via the API to generate text outputs. Additionally, OpenAI is adding natural-sounding audio generated from text inputs to the ChatGPT app.

GPTs/Assistants API: OpenAI launched GPTs, a significant step toward simplifying the integration of generative AI into various platforms, which let users tailor ChatGPT to specific purposes and tasks. The Assistants API brings similar agent-like building blocks (persistent threads, retrieval, and Code Interpreter) to developers’ own apps. The GPT Store is rolling out later this month and will feature revenue sharing for developers with the most successful applications.

New Modalities In the API: GPT-4 Turbo with Vision can accept images as inputs in the Chat Completions API, enabling use cases such as caption generation, detailed image and document analysis, and navigation assistance. DALL·E 3 can now be integrated directly into apps through the API. TTS, OpenAI’s new text-to-speech model series, generates human-quality speech from text using six preset voices and comes in two variants: one optimized for real-time use cases and one optimized for quality.
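As a rough illustration of the new modalities, the helpers below build request bodies for image input and text-to-speech. They are a sketch, assuming the `image_url` content-part format, the `gpt-4-vision-preview` model name, and the `tts-1` / `tts-1-hd` models with preset voices such as `alloy`, as documented around DevDay; `vision_request` and `tts_request` are illustrative helper names, not part of any SDK.

```python
import base64

# Preset TTS voices at launch (assumption: list as documented around DevDay).
TTS_VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}


def vision_request(prompt: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Chat Completions kwargs sending text plus one inline image as a base64 data URL."""
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4-vision-preview",  # assumed preview model name
        "max_tokens": 300,  # set explicitly to cap the length of the reply
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }],
    }


def tts_request(text: str, realtime: bool = True, voice: str = "alloy") -> dict:
    """Speech API kwargs: tts-1 favors low latency, tts-1-hd favors quality."""
    if voice not in TTS_VOICES:
        raise ValueError(f"unknown voice: {voice!r}")
    return {"model": "tts-1" if realtime else "tts-1-hd", "voice": voice, "input": text}
```

With the `openai` package (v1+), these would map onto `client.chat.completions.create(**vision_request(...))` and `client.audio.speech.create(**tts_request(...))`. Choosing the model from a single `realtime` flag mirrors the latency-versus-quality trade-off between the two TTS variants.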

Custom Models: For organizations that need more customization than fine-tuning alone can provide, OpenAI will work directly with them to train custom models. Organizations get exclusive access to the resulting models, which will not be served to or shared with other customers or used to train other models.

Copyright Shield: Aimed at mitigating customers’ risk of copyright infringement claims, OpenAI will step in to defend customers and pay the costs incurred from such legal claims. The protection applies to generally available features of ChatGPT Enterprise and the developer platform.

Why It Matters

Given the increased competition in the LLM space, OpenAI’s DevDay announcements are geared toward increasing lock-in to the OpenAI platform.

The GPTs and Assistants demos highlighted the black-box and vendor lock-in concerns that I previously cited as risks to enterprise adoption. Additionally, with the introduction of Custom Models, organizations can pay $2-3M (and wait some time) to create a privately hosted LLM trained on proprietary data, potentially improving accuracy and easing security concerns about sending data to OpenAI’s API. This also underscores OpenAI’s foray into LLM consulting engagements, a potentially lucrative strategy that pushed companies like MosaicML into the limelight and helped MosaicML get acquired by Databricks for $1.3B.

With both Custom Models and GPTs, OpenAI is expanding its TAM by enabling both technical and non-technical users to leverage OpenAI capabilities, whether that be through proprietary models, general models, or agent-like workflows.

Finally, and perhaps most importantly, yesterday’s developer conference showcased just how strong distribution can be when paired with groundbreaking technology. In one fell swoop, OpenAI created a graveyard of startups, each aiming to own one piece of the LLM infrastructure and development process. In this Hacker News thread, developers argue over which categories of AI startups have just been rendered obsolete by OpenAI’s latest product drop.

What Practitioners Are Saying

I talked with a few machine learning engineers whose day-to-day work depends on advancements in the LLM space. Here’s what they had to say:

On Becoming the Next Hyperscaler:

OpenAI was paying close attention to the AI developer and startup ecosystem. They took the time to understand what was needed to extract value from an LLM ‘primitive’ (retrieval/RAG, prompt engineering, and agent plug-ins, to name a few) and released a suite of tools that position OpenAI to become the AWS for AI.

A long list of infrastructure-layer startups will be significantly impacted by the announcements. And with the introduction of GPTs, application-layer companies will need to get closer to their core customers in order to succeed. Meanwhile, multimodality changes the work landscape for many ML engineers. As a long-time ML engineer myself, I wonder if the era of training or even fine-tuning bespoke deep learning models for text and vision tasks (like document processing) may already be coming to an end. The end result is better for the AI product and its users.

Still, I have a note of caution. Today, there is hesitation to use OpenAI’s products even if they’re best in class, given that some developers dislike their “closed” / “abstracted” nature, and little has been done for use cases where data cannot or shouldn’t be sent over a network.

Looking at the big picture, even the simple improvements to LLMs are incredible. We now have access to extremely effective multimodal APIs, super long context windows (128k tokens), cheaper inference, integrated code and API agents, custom model creation, and reproducibility and formatting controls. This will make building great products with AI at their core so much easier, but may make it more difficult to build products for AI development.

— Ceena Modarres, Formerly Senior Engineering Manager, Machine Learning at Hyperscience

On Improving the Developer Toolkit:

Accessibility & Quality: GPTs are revolutionizing product development. By eliminating the need for users to manage history, retrieval, and UIs, they lower the barrier to entry for creating products. This ease doesn't compromise quality, thanks to the revenue-sharing model of the store. The absence of streaming UIs and message storage requirements significantly speeds up the development of proofs of concept.

Competitive Pricing & Features: OpenAI's new pricing structure and the enhanced capabilities of models and fine-tuning are game-changers. The Assistants API, additional fine-tuning hyperparameters, and reduced costs all contribute to products that are inherently superior to those built with previous techniques. OpenAI's technological moats, scaling efficiencies, and bespoke models for large enterprises intensify the competition for other model providers.

System Safety & Performance: While JSON mode doesn't impress me much, the improved accuracy in function calls signals safer system-level integrations. OpenAI's strategy for scalable constrained sampling with manageable performance costs is promising, with JSON mode being an initial step. Despite the support for special decoding by other open-source models, OpenAI maintains a lead, likely until logits are reintegrated into outputs. Curiosity remains about the performance metrics of JSON mode versus function calling.

— Jason Liu, AI Consultant, Formerly Machine Learning Engineer at Stitch Fix
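The “constrained sampling” mentioned above generally works by masking the model’s logits at each decoding step so that only tokens that keep the output valid (for example, under a JSON grammar) can be chosen. OpenAI has not published how JSON mode is implemented, so the sketch below is a generic illustration of logit masking, not OpenAI’s method; the tiny vocabulary and logit values are made up.

```python
import math
import random


def masked_softmax(logits: dict[str, float], allowed: set[str]) -> dict[str, float]:
    """Turn logits into probabilities, giving zero mass to tokens the grammar forbids."""
    kept = {tok: score for tok, score in logits.items() if tok in allowed}
    if not kept:
        raise ValueError("no allowed token in the vocabulary")
    top = max(kept.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - top) for tok, score in kept.items()}
    total = sum(exps.values())
    return {tok: exps.get(tok, 0.0) / total for tok in logits}


def constrained_sample(logits: dict[str, float], allowed: set[str], rng: random.Random) -> str:
    """Sample one token from the masked, renormalized distribution."""
    probs = masked_softmax(logits, allowed)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    # Hypothetical first decoding step: a chatty model prefers "Sure",
    # but a JSON object must begin with "{".
    step_logits = {"Sure": 3.2, "Here": 2.8, "{": 1.5, "[": 0.4}
    print(constrained_sample(step_logits, allowed={"{"}, rng=random.Random(0)))
```

Because only `{` is allowed at this step, the sample is always `{` even though the unconstrained model would most likely have started with “Sure”. The cost of the approach is computing the allowed set at every step, which is where the performance trade-offs mentioned in the quote come in.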

On Shifting Perspectives of AI:

While there has been much doom and gloom over OpenAI killing AI startups, I think OpenAI has simply prepared the public’s minds for what is possible and broadened the potential markets.

This is the same effect as ChatGPT: an easy-to-use experience in front of really sophisticated and powerful tech that helps people contextualize how that tech can be used. While the baseline quality customers expect has now increased (and will continue to increase), it should also mean that they’ll be more willing to pay for the increased quality and value they derive from LLM-driven apps. Finally, while OpenAI has raised the quality floor, the ceiling of building purely within their ecosystem is still pretty low; context-specific applications will be able to leverage their domain knowledge and integrations to improve their end-to-end experience.

Basically, OpenAI is a hyperscaler and all of the things they announced today “just make sense” and were previously predicted.

— Chris Hua, Formerly Senior Staff Machine Learning Engineer at Square

Yesterday’s announcements from OpenAI underscored just how quickly the industry is moving. In the twelve months since ChatGPT launched, we saw ChatGPT itself blow past 1M users in its first week, a billion-dollar exit just 8 months later, massive capital inflows from the venture capital ecosystem into hopeful AI startups, and the first LLM-enabled product reach $100M ARR. I’m excited to see what’s to come in the next twelve months!